US project seeks standard way to communicate research retractions

Journals communicate information on retracted research articles in a wide range of ways. Credit: Hafiez Razali/Shutterstock

In a bid to standardize how updates to scientific papers — such as retractions, corrections and expressions of concern — are communicated, the US National Information Standards Organization (NISO) has drawn up recommendations for publishers, journals, funders and others in the science ecosystem.

In October, NISO, a non-profit organization based in Baltimore, Maryland, that develops technical standards for institutions such as libraries and publishers, released the recommendations for public comment.

Jodi Schneider, an information scientist at the University of Illinois at Urbana–Champaign, was part of the Communication of Retractions, Removals, and Expressions of Concern (CREC) Working Group that drew up the guidelines. She spoke to the Nature Index about the project.

How did the project come about?

Part of NISO’s role is to identify what standards we need. In February 2021, we presented our research on retracted science to a global NISO conference held online. Out of that, there was enormous excitement around NISO doing something about the issue of how retractions are communicated.

It’s clear that even though there are relatively few retractions across the board, they can have a big impact. We saw this during the COVID-19 pandemic, with the retraction of two high-profile studies. As reported in 2021, more than 52% of the studies that cited these retracted papers did so without acknowledging that they had been pulled, despite the retractions receiving significant media attention at the time<sup>1</sup>.

When we analysed the citations of 7,813 retracted papers indexed in the biomedical database PubMed, we found that around 94% of studies that cited a retracted paper didn’t acknowledge that it had been retracted<sup>2</sup>. It became obvious to us that knowing whether something has been retracted was part of the problem.

It can be difficult for a reader to work out whether a paper has been pulled from the literature. That’s because journals display their retraction notices in different places, and often the retraction notice is not linked to the original paper. As a result, people continue to cite retracted research.

What changes do your guidelines propose?

Retractions need to be easy to spot for humans, as well as for automated systems that are designed to scan the literature. So, we wrote a summary for NISO explaining what we think needs to happen, on the basis of our findings. It wasn’t about when something should be retracted, but rather about how the retraction should be communicated.

A large part of what we’re recommending is modifications in how retraction information — including article titles and author names — is shared. We recommend that all journals use the same format to label retracted papers, as well as those that have been flagged with other editorial notices.

What feedback did you receive?

Scholarly publishing has a diverse group of stakeholders, including journal editors, indexing databases, librarians and repositories, and these are affected by retractions in different ways. Issues that arise include, for example, whether repositories should remove public versions of retracted papers that they host, and whether indexing sites or aggregators should be responsible for making sure that retracted papers and their corresponding retraction notices are appropriately linked.

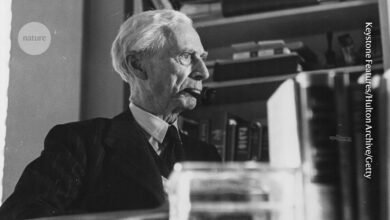

Jodi Schneider has helped to draw up guidelines to standardize how retractions are communicated. Credit: Thompson-McClellan Photography

Another issue concerns who needs to be notified when a publication is pulled, and who is responsible for such notifications. The decentralization of scholarly publishing is necessary, but it means that there’s no one authority scanning for new retractions or keeping track of old ones. Publishers are not equipped to carry out investigations when it’s a case of fraud, for instance — that’s the job of institutions.

The feedback we received highlighted concerns such as these, and how new processes could address them. The questions that arise include: how can repositories and digital preservation services be better incorporated into the retraction notification process? Should there be a separate DOI for cases in which items are retracted and then republished? How should differences in terminology (publishers have many definitions of ‘withdrawal’, for example) be handled? There’s also more attention being paid to what automated checks can be run to weed out problematic science before it is published.

Do you think your recommendations will catch on?

Yes, I think the publishing community is going to take this on board. Implementing the recommendations will benefit stakeholders in many ways. Automatic notifications about retractions could alert authors that they have cited a retracted paper, prompting them to investigate whether the retraction affects their work. Such notifications could also go to funders.

Beyond notifications, publishers could automatically mark the bibliographies of published articles if a paper they cite is retracted. PubMed Central, a free digital repository for research literature, is known for this kind of automatic marking.

There’s a lot of scepticism in the research world about the value that publishers are adding. By adopting these measures, publishers can show that they are making a long-term commitment to the content and to building trust and accountability.

This interview has been edited for length and clarity.